AI-enabled toys and tools for young children are already on the market, yet product teams have little guidance on how to design developmentally appropriate experiences for this age group. Most existing recommendations address children broadly, often lumping together ages birth through 17, which makes it difficult for designers to understand what “developmentally appropriate” actually means for the youngest users.

To better understand this landscape, the Digital Wellness Lab convened a one-day workshop in July 2025, sponsored by the Harvard Radcliffe Institute for Advanced Study. We brought together 15 U.S.-based experts in child development, product design, and online safety to examine existing guidance and identify where the gaps are most acute.

What We Found

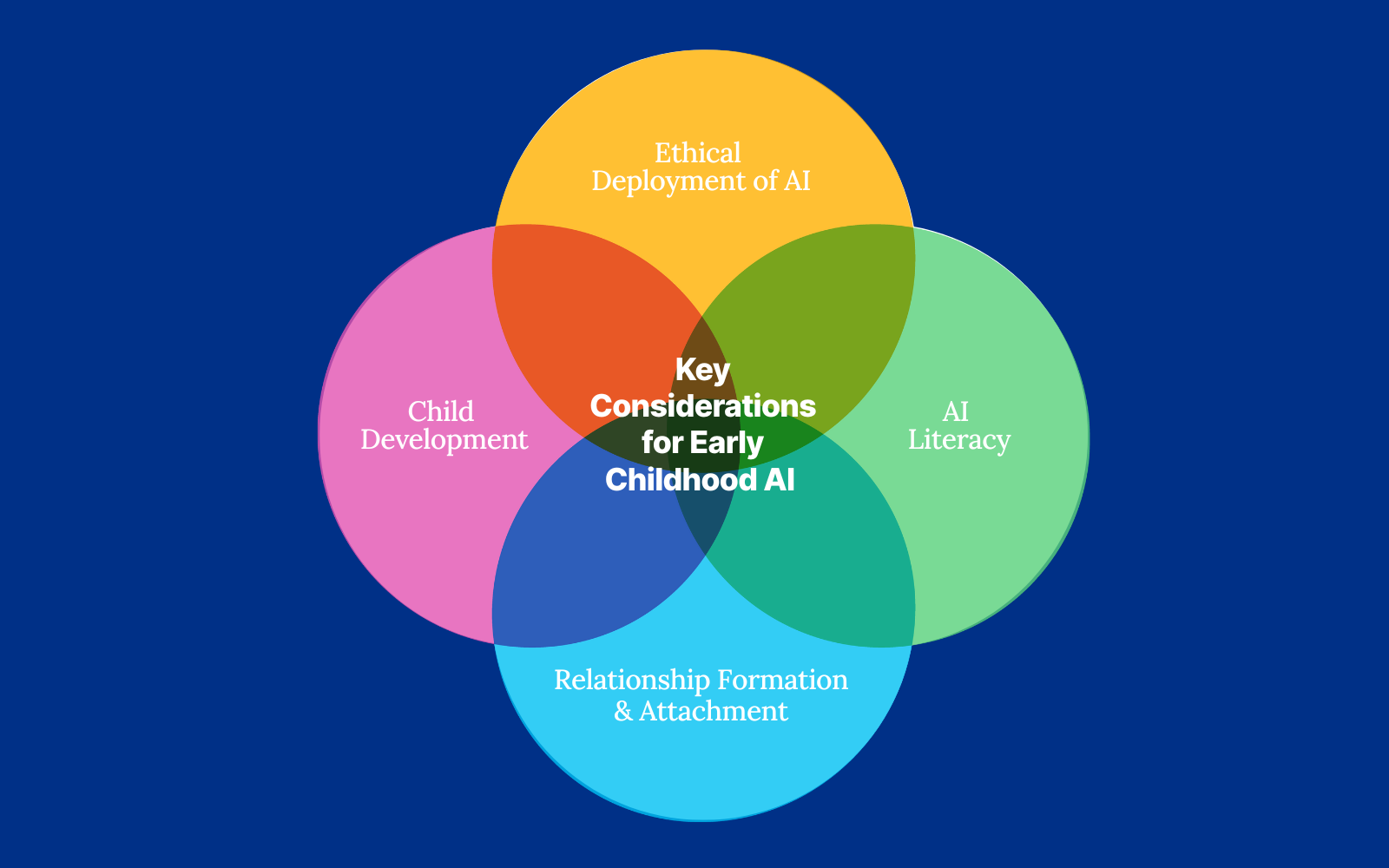

Before the workshop, we analyzed over 30 publicly available global policy and advocacy documents addressing AI and children. The distribution of topics was striking:

| Domain | Description | Coverage |
| --- | --- | --- |
| Ethical Deployment of AI | Privacy, bias mitigation, and safety protection for young users. | 65.79% |
| Child Development | How AI products can impact emotional, social, and cognitive development in young children. | 19.3% |
| AI Literacy | Helping children develop a basic understanding of what AI is and isn’t, including its limitations and how to question the information it provides. | 9.65% |
| Relationship Formation & Attachment | The emotional impact and formation of relationships with AI-driven characters or non-human agents. | 5.26% |

The emphasis on privacy and safety is understandable, but areas like AI literacy (helping children understand what AI is and isn’t) and relationship formation (how children form attachments to responsive, interactive agents) have received far less attention. These gaps are particularly significant for early childhood, when children are still learning to distinguish fantasy from reality and are forming foundational models for relationships.

What We Heard

In interviews with industry professionals, we heard consistent frustration. Current guidance, they told us, is often vague or contradictory, or simply doesn’t account for young children’s developmental needs.

As one put it: “They’re assuming that a 5-year-old will be able to navigate a voice-based interface just because talking is easy for a child. But the developmental challenge is not the ability to talk—it’s the ability to comprehend that this is a fictional, generative character, not a real person.”

Another noted: “Being child-friendly is not the same thing as being developmentally appropriate. And that distinction is sometimes hard for ed-tech founders without the education background to understand.”

Areas That Emerged for Further Guidance

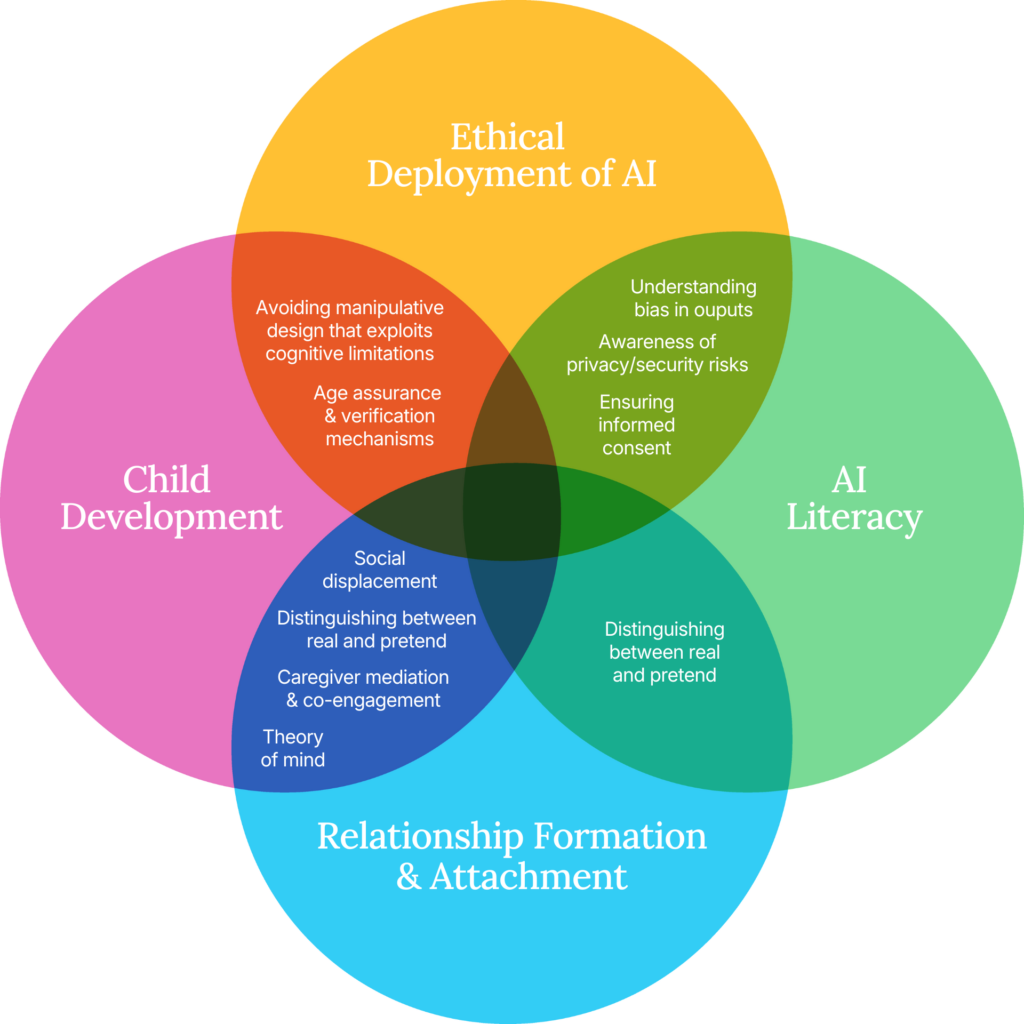

Through small-group work and structured discussion, workshop participants identified key considerations in four interconnected areas where clearer, more specific guidance could help product teams.

| Domain | Guiding Question | Key Considerations |
| --- | --- | --- |
| Ethical Deployment of AI | Beyond privacy and safety basics, how do we address bias mitigation and content moderation for young children who can’t self-advocate? | Regulation and accountability; bias mitigation and algorithmic fairness; accessibility and inclusion; ethics of advertising and monetization; technical safeguards; societal impact considerations |
| Child Development | How do we ensure AI interactions support early skills like language development, emotional regulation, and social connection? | Impact on identity and autonomy; skill-building and language development; physical development and motor skills; cognitive impacts (memory, executive function, attention) |
| AI Literacy | How can products help young children develop a basic understanding of what AI is and isn’t, ideally through experiences they can interpret without adult explanation? | Teaching how AI works (logic, training data, limitations); spotting hallucinations; empowerment through design and creation; creative agency and design thinking |
| Relationship Formation & Attachment | How do we design engaging play experiences without encouraging overreliance or confusion about what’s “real”? | Emotional attachment to and anthropomorphization of AI; “parasocial” relationships with AI; trust calibration |

One of the clearest takeaways was how interconnected these areas are. For example, a single design choice, such as how an AI character responds to a child’s question, touches all four domains at once.

This diagram (PDF) illustrates where design decisions sit at the intersection of multiple considerations.

What’s Next

This workshop was a starting point. Participants surfaced important questions and, in several areas, perspectives diverged: How do we balance robust data protections with the reality that AI products need to learn from user interactions to improve? Is framing an AI agent as a friendly non-human character developmentally helpful, or does it create new confusion?

We’re continuing to analyze the workshop feedback and refine the discussion into guidance that is specific, feasible, and actionable for product teams and policymakers. In 2026, we expect to release a prototype decision-support tool to partners for hands-on testing and feedback.

The question is no longer whether young children will interact with AI—that’s already happening. The question is whether those interactions will be shaped with their development in mind.